In this group project, we implement the Natural Language Processing (NLP) model for English to Mongolian translation. Today, sequence-to-sequence (seq2seq) models are becoming very widespread and provide very high quality and almost excellent performance in a wide variety of tasks. We used a deep neural network, sequence-to-sequence module was built using the PyTorch library, with an encoder, decoder, and attention. In other words, we can say that seq2seq models constitute a common framework for solving sequential problems, like Google Translate, online chatbots, text summarization, speech-to-text conversion, and speech recognition in voice-enabled devices.

Using Tab-delimited Bilingual Sentence Pairs data, we found that there are more than 70 language pairs available, where some of them with the most comprehensive vocabulary, somewhere with somewhat level of details, but also we found that there are no English-Mongolian pair in this dictionary. We recognize that on the general website of the project where there are more than 330 different language pairs, including Mongolian, but the data for this language is too small – around 550 sentences and the performance of the translation is so bad. Since that time, we decided to focus on this language pair and work on the seq2seq model.

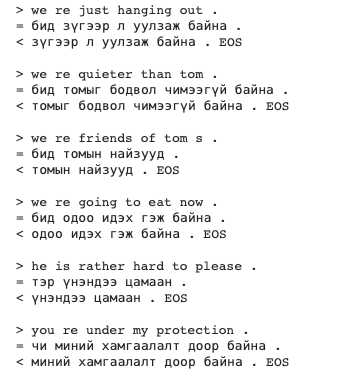

English sentences were translated manually by four people within a month since there is no training data for English-Mongolian sentence pairs. We got sentences from the Russian training data of the Tatoeba Project, and we selected the sentences with eight or fewer words. From those 16,000 sentences, we tried to translate longer sentences (more than four words) for training purposes. Finally, we got about 1,700 sentences translated. We checked each of them for a proper and correct translation. From that point, we are able to move forward with the model.

The encoder layer is bidirectional GRU cells with Embedding and Dropout, and the Decoder layer is GRU cells with Dropout and also contains the Attention layer, which is a Linear module. As far as we did not have training data for Mongolian translation, we wrote the translation network code using Turkish training data, thinking it might help with the overall network structure.

For the task of Neural Machine Translation on seq2seq model, we used Python and PyTorch library, and for GPU, we used Google Colab notebook. Google Collaboratory has an interface like the Jupyter notebook and works in Google drive. We used our school account to get access.

Before going to the model, we first went more profound to the field of linguistics. In terms of linguistic typology and classification of languages according to their structural and functional features, languages are divided into two main parts. Languages may be classified according to the dominant sequence of these elements in unmarked sentences.

Till getting the training data, we experimented with our last homework seq2seq model and Turkish training data. We picked the architecture because it was mentioned in the paper (Bahdanau, et al. 2014) that for longer sentences, it was performing well. In order to translate from SVO language to SOV language, the model needs to carry information until the end of the sentence.

Sources:

(1) Dzmitry Bahdanau, Kyunghyun Cho, Yoshua Bengio, “Neural Machine Translation by Jointly Learning to Align and Translate”, 2014, arXiv:1409.0473

(2) Sutskever I., Vinyals O., and Q. V. Le, “Sequence to sequence learning with neural networks,” in NeurIPS, 2014, pp. 3104–3112.

This group project was completed in 2019.

Tools used: [Deep Learning] [Python] [seq2seq] [PyTorch] [Natural Language Processing] [NLP] [AI]